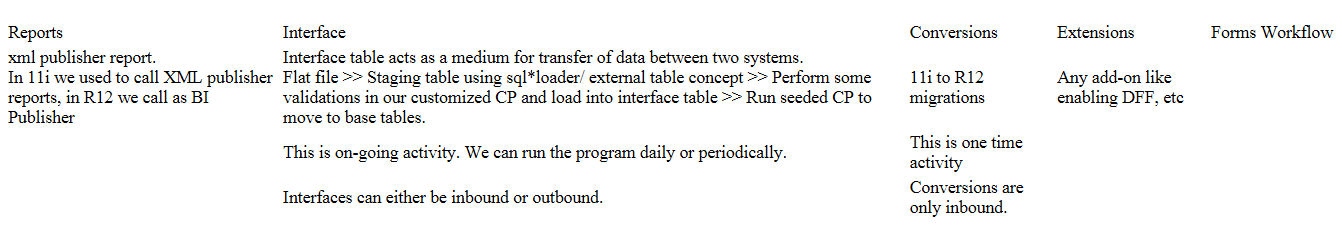

RICEFW

Index

1. RICEFW

2. RTF Template (.rtf)

3. Reports Builder (.rdf)

4. Image/Logo Path

5. Removing files from Data Definition

6. Workflow

7. Staging Tables

8. SQL*Loader

9. UTIL_FILE

10. External Table

11. Formatting Month using XDOXSLT

12. Difference between Lexical Parameter and Bind Variable in Oracle Reports

13. System Parameters used in Reports Builder

14. Discoverer Reports

1. RICEFW

2. RTF Template (.rtf)

To eradicate exponential format or to increase line spacing to fit the column in excel output use below syntax in data definition (RTF – word/excel):

<fo:bidi-override direction=”ltr” unicode-bidi=”bidi-override”><?GIVE_ELEMENT_NAME?></fo:bidi-override>

3. Reports Builder (.rdf)

RDF = Report Definition File

** RDF Components **

The main components involved in Oracle RDF report generation are:

1. Data Model: Defines the business logic and data retrieval for the report. It specifies which tables or data sources are queried, the relationships between them, and includes parameters, groups, formula columns, summary columns and placeholder columns.

2. Layout: Determines how the retrieved data is presented to the user. This involves the formatting, arrangement of fields, and overall visual structure of the report output.

3. Output Format: Specifies the format in which the report will be generated and delivered, such as PDF, HTML, Excel, XML, or text. The output format is chosen based on the requirements and the capabilities of the reporting technology.

These three components—data model, layout, and output format—form the core structure of Oracle RDF report generation, ensuring that data is accurately retrieved, properly formatted, and delivered in the desired format to end users.

** Layout Components **

The main components involved in Oracle Reports Builder layout design are:

1. Frames: Used to group and organize other layout objects, ensuring they maintain their relative positions during report generation. Frames can contain fields, repeating frames, and other frames.

2. Repeating Frames: Display repeating data, such as multiple rows from a query or group. They repeat for each record in the associated data group.

3. Fields: Represent data values from the data model (such as columns, parameters, or calculated fields) and display them in the report output.

4. Boilerplate Objects: Static elements like text, lines, rectangles, or images that are not dynamically generated from the data model but enhance the report’s appearance (e.g., titles, logos, decorative lines).

5. Anchors: Define the relative positioning of layout objects, ensuring that objects remain aligned or attached to each other as the report layout changes.

Layout Sections: The layout is divided into three main sections:

i) Header Section: Appears at the top of each page (for titles, column headings, etc.).

ii) Main Section: Contains the core data output.

iii) Trailer Section: Appears at the end or bottom of each page (for totals, summaries, footers).

Margins: Areas outside the main report body where you can place page numbers, dates, or other fields.

These components together define the visual structure, formatting, and organization of data and static content in Oracle Reports Builder’s layout design.

** Register an Oracle RDF report and run it using a concurrent program **

1. Develop and Compile the RDF Report

– Use Oracle Reports Builder (e.g., Reports 6i) to create the .rdf report as per requirements.

2. Move the RDF File to the Server

– Transfer the .rdf file from your local machine to the appropriate application top directory on the server in binary mode, typically:

– For custom apps: ‘$CUST_TOP/reports/US’

– For standard apps: e.g., ‘$PO_TOP/reports/US’ for Purchasing.

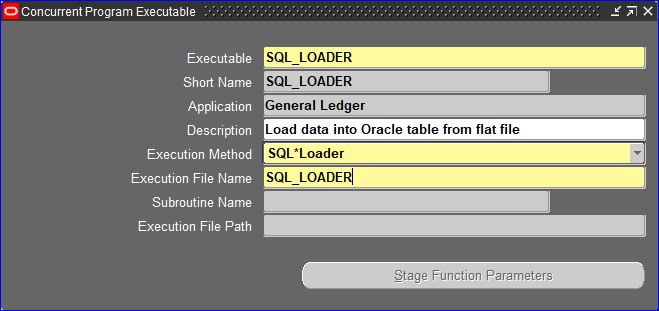

3. Register the Executable

– Log into Oracle Applications with the System Administrator or Application Developer responsibility.

– Navigate to: Application Developer → Concurrent → Executable

– Enter the following details:

– Executable Name: User-friendly name for the executable.

– Short Name: Unique identifier.

– Application: The application under which the report is registered.

– Execution Method: Select Oracle Reports.

– Execution File Name: The .rdf file name (without extension).

– Save your changes.

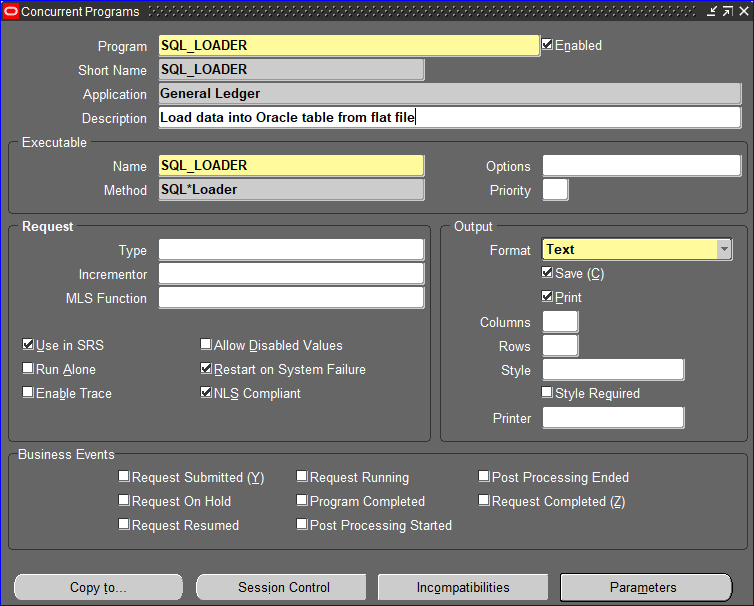

4. Define the Concurrent Program

– Navigate to: Application Developer → Concurrent → Program

– Enter:

– Program Name: Name shown to users.

– Short Name: Unique value.

– Application: Same as above.

– Executable Name: Link to the executable defined earlier.

– Output Format: Choose output type (e.g., PDF, HTML).

– Output Style: Eg. A4

– Save the program.

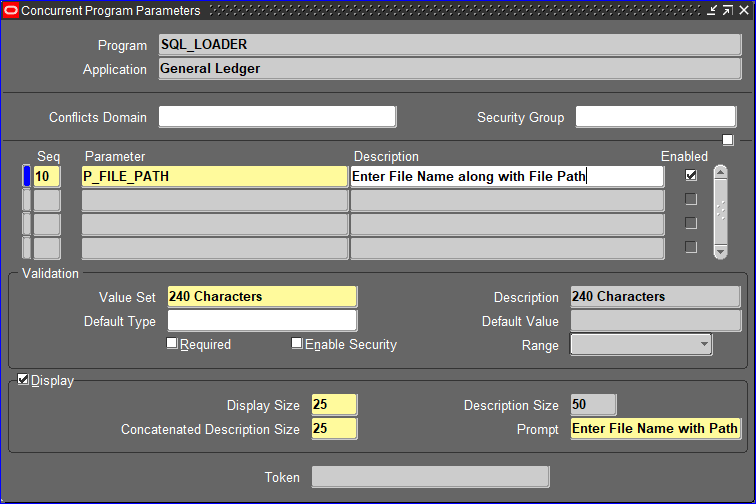

5. Define Parameters (If Needed)

– In the Concurrent Program form, click the Parameters button.

– For each parameter:

– Sequence: Order of parameters.

– Parameter: Name.

– Description: For user clarity.

– Value Set: For validation.

– Token: Must match the parameter name in the RDF report.

– Save your changes.

6. Assign the Concurrent Program to a Request Group

– Navigate to: System Administrator → Security → Responsibility → Request

– Query the relevant request group and add your concurrent program to it.

7. Assign the Request Group to a Responsibility

– Navigate to: System Administrator → Security → Responsibility → Define

– Attach the request group to the appropriate responsibility.

8. Assign the Responsibility to a User

– Navigate to: System Administrator → Security → User → Define

– Assign the responsibility to the user who will run the report.

9. Run the Report

The user logs in, selects the responsibility, and submits the report through the Standard Request Submission (SRS) form.

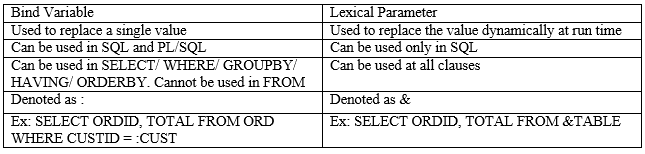

**Bind parameters and lexical parameters**

1. Bind Parameters

Purpose: Allow you to substitute a single value at runtime in your SQL or PL/SQL code.

Syntax: Identified by a colon (:) prefix (e.g., :P_DEPTNO).

Usage: Used to filter data or pass values into queries, such as in WHERE, SELECT, GROUP BY, ORDER BY, HAVING, CONNECT BY, and START WITH clauses.

Example:SELECT * FROM emp WHERE deptno = :dno

Here, :dno is a bind parameter whose value is provided at runtime.

2. Lexical Parameters

Purpose: Allow you to substitute entire SQL fragments (such as column lists, WHERE clauses, or ORDER BY clauses) at runtime.

Syntax: Identified by an ampersand (&) prefix (e.g., &where_clause).

Usage: Used for dynamic query construction, such as inserting or modifying parts of the SQL statement based on user input or logic.

Example:SELECT * FROM emp &a

Here, &a could be replaced at runtime with a string like WHERE deptno = 10 or ORDER BY ename.

**Report Triggers**

1. Before Parameter Form Trigger – Fires before the Runtime Parameter Form is displayed. Used to initialize or modify parameter values before user input.

2. After Parameter Form Trigger – Fires after the Runtime Parameter Form is displayed and user input is received. Used to validate or manipulate parameter values before report execution.

3. Before Report Trigger – Fires after queries are parsed but before the report is executed. Used for setup tasks, such as initializing variables or preparing data.

4. Between Pages Trigger – Fires before each page of the report is formatted, except the first page. Useful for custom page formatting or logic that needs to run on every page except the first.

5. After Report Trigger – Fires after the report output is sent to its destination (screen, file, printer, etc.). Used for cleanup tasks or final processing after the report is complete.

These triggers must return a Boolean value (TRUE to continue, FALSE to halt processing) and are managed in the Object Navigator of Oracle Reports Builder. They provide essential control for customizing report processing and output.

**Special Columns**

Formula Column

– Purpose: Used to perform custom calculations or logic not directly available from the database.

– How it works: You write PL/SQL code in the column’s property to compute its value. The result is calculated at runtime for each record or group, depending on where the column is placed.

– Example: Calculating commission as `salary * 0.10` or concatenating first and last names into a full name.

Summary Column

– Purpose: Used to perform standard aggregate functions such as SUM, AVG, COUNT, MIN, or MAX on other columns.

– How it works: You define the source column and the type of summary operation. The summary column automatically computes the result for a group or the entire report.

– Example: Calculating the total salary for each department using `SUM(salary)`.

Placeholder Column

– Purpose: Acts as a container to hold a value that will be assigned later, typically by a formula column or PL/SQL trigger.

– How it works: You define the column in the data model, but it does not have an initial value or calculation. Its value is set dynamically during report execution.

– Example: Storing an intermediate calculation that will be used by other columns or triggers later in the report.

4. Image/Logo Path

The images/ logos are placed in media directory and the name of the directory is OA_MEDIA.

In rtf right click on logo >> Format Picture >> Under Format Picture, select Layout & properties tab >> Go to ALT TEXT >> Under description enter value as given below:

url:{concat(‘$[OA_MEDIA]/’,.//Enter element name)}

In few instances we have to mention complete OM_MEDIA path. Get the correct path from DBA’s as this varies from instance to instance.

Ex: url:{concat(‘/abcapp/apps/apps_st/comn/java/classes/oracle/apps/media/’,.//Enter element name)}

5. Removing files from Data Definition

We wont be able to delete files from data definition from XML Publisher responsibility so have to go use below syntax.

DELETE FROM xdo_lobs

WHERE lob_code = ‘Give Data definition code’

AND lob_type = ‘BURSTING_FILE’ or ‘DATA_TEMPLATE’

Also we can download templates from backend using table xdo_lobs where files get saved in file_data column.

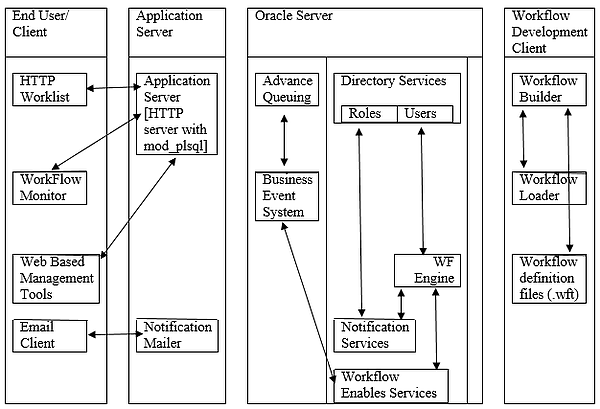

6. Workflow

Workflow Architecture

Activities in Workflow:

1. Attributes

2. Processes

3. Notifications

4. Functions

5. Events

6. Messages

7. Lookup Types

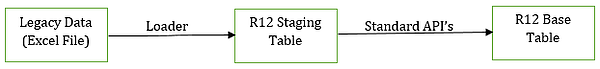

7. Staging Tables

The legacy system data can be loaded into R12 staging table using either of three concepts.

1. SQL *Loader

2. UTIL_FILE

3. External Table

4. Web ADI

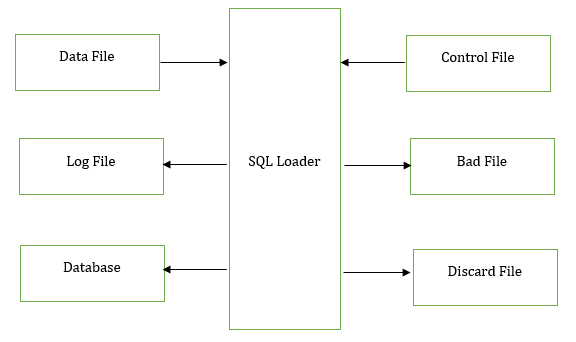

8. SQL*Loader

An utility provided by Oracle to load data from external files to database.

Here the data file and control file are input files and log file, bad file and discard file are outputs to SQL Loader.

Data File: Which contains actual data (.csv or .dat) and usually placed in path like /usr/tmp (select * from dba_directories)

Control File: Gives the format in which the data file has to be loaded and gets placed in applications top (like $APP_TOP/bin)

Log File: Contains log information like successful/ error records. This is default file.

Bad File: Invalid format records

Discard File: Rejected records

SQLLDR when execute in command prompt:

SQLLDR SCOTT/TIGER LOAD.ctl LOAD.log LOAD.bad LOAD.dat — Syntax to execute in command prompt and should be in same order. In case we use named parameters then no need to follow same order like SQLLDR USERID=SCOTT/TIGER CONTROL=LOAD.ctl DATA=LOAD.dat

Once the data is loaded into table then COMMIT gets auto executed internally.

/*Creation of Staging table*/ DROP TABLE cust_stg_tab CASCADE CONSTRAINTS; CREATE TABLE cust_stg_tab ( customer_id NUMBER ,customer_name VARCHAR2(400 BYTE) ,account_number VARCHAR2(100 BYTE) );

/*Creation of control file:*/ /*************************************************************************** -- File Name : CUSTOMER_LOAD.ctl -- Type : CONTROL FILE -- Purpose : To load Customer Data into R12 staging table ***************************************************************************/ OPTIONS (SKIP=1) /*This skips first row (column names) in data file and is optional*/ LOAD DATA CHARACTERSET 'UTF8' INFILE 'CUSTOMER_DATA.csv' /*Data file name and use INFILE only when data file and control file are placed in same folder*/ DISCARDFILE 'sample_discard_file.txt' TRUNCATE(or)APPEND INTO TABLE CUST_STG_TAB WHEN account_number = "1234" /*Staging table*/ FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"' TRAILING NULLCOLS ( CUSTOMER_ID "TRIM(:CUSTOMER_ID)" , CUSTOMER_NAME "TRIM(:CUSTOMER_NAME)" , ACCOUNT_NUMBER "TRIM(:ACCOUNT_NUMBER)" )

Save the file as .ctl extension

Data File:

Save the excel file as .csv extension

A sample data file (.csv) with two records is shown below.

| CUSTOMER_ID | CUSTOMER_NAME | ACCOUNT_NUMBER |

| 1001 | ABC Corp Limited | 1234 |

| 1002 | XYZ Corp Limited | 5678 |

Prior to sending the data file or control file to DBA’s to place at /usr/tmp path or $APPS_TOP/bin path we can test the files in Toad by placing the two files under one folder.

Open Toad >> Database >> Import >> SQL *Loader Wizard >> Check Use control file >> Next >> Browse control file under control file name >> Next >> Check Execute Now >> Click Finish >> Check the successful message in log tab.

Now check SELECT * FROM CUST_STG_TAB, two records will be shown.

→ For fixed length of data we give positions as POSITION(1:5). It consider from first character to fifth character.

→ In SQLLDR we have INTEGER datatype not NUMBER datatype.

→ For certain fields we get delimiter comma (,) but for fields we do not. Then we have to declare on that column independently. Ex: column_name datatype TERMINATED BY ‘:’

→ Declare date datatype column and mention format. column_name DATE “DD-MONTH-YYYY”

→ Auto generate sequence number. column_name SEQUENCE(MAX, 1)

→ If specific token is found at first place then use CONTINUEIF (1) = ‘*’. Here * considers as start of row.

→ If specific token is found at end then use CONTINUEIF LAST <> ‘|’. Here first row considers till end of |.

→ CONTINUEIF THIS (1:2) = ‘**’

→ Say we have emp 1234 Baker working on three depts (101, 102, 103) then we have to convert de-normalize data into normalize data.

→ NULLIF — We cannot use NVL in SQLLDR rather we have to use NULLIF. In fixed length we use NULLIF in NUMBER datatype columns and for CHAR columns its not required.

→ If we use INDEXES then its direct path loading, else it will be conventional path

→ We can manipulate data using SQLLDR

→ Multiple files data into multiple tables: File structure should be same.

SQL Loader in EBS

DROP TABLE sql_loader CASCADE CONSTRAINTS;

CREATE TABLE sql_loader (

column1 NUMBER,

column2 VARCHAR2(100),

column3 VARCHAR2(100)

);

Control file syntax: Place file in ‘/u01/app/instance_name/apps/apps_st/appl/js/12.0.0/bin’ path (custom/bin path) with .ctl as extension

options(

bindsize = 900000,

rows = 2000,

skip = 1

)

LOAD DATA

CHARACTERSET 'UTF8'

TRUNCATE INTO TABLE sql_loader

FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"'

TRAILING nullcols (

column1 "TRIM(:column1)",

column2 "TRIM(:column2)",

column3 "TRIM(:column3)"

)

Place the data file (.csv) having three columns (column1, column2, column3) in any path (/u01/oraout/instance_name/inbound/stack) and run the program by passing /u01/oraout/instance_name/inbound/stack/file_name.csv

9. UTIL_FILE

UTL_FILE.FILE_TYPE; — Pointer to find the file location

UTL_FILE.FOPEN; — Open the existing file or create a new file

UTL_FILE.PUT_LINE; — Transfer data from DB to flat file

UTL_FILE.GET_LINE; — Transfer data from flat file to DB

UTL_FILE.FCLOSE; — Closes the file

Below are UTIL_FILE Exceptions:

UTL_FILE.invalid_operation

UTL_FILE.invalid_path

UTL_FILE.invalid_mode

UTL_FILE.invalid_filehandle

UTL_FILE.read_error

UTL_FILE.internal_error

UTL_FILE.write_error

UTL_FILE.access_denied

SET SERVEROUTPUT ON

CLEAR SCREEN

/*Note

--SELECT * FROM all_directories;

--The directory name should be considered one of the value from value column in below query

--SELECT * FROM v$parameter WHERE name like '%utl_file%';

--The privilege should be execute in below query

--SELECT * FROM all_tab_privs where table_name = 'UTL_FILE';

--Check this directory has read and write privileges in below query

--SELECT * FROM all_tab_privs where table_name = 'ODPDIR';*/

DECLARE

lv_write_file_handle utl_file.file_type;

lv_fileoutput VARCHAR2(1000) := 'Outbound_Interface';

BEGIN

lv_write_file_handle := utl_file.fopen('ODPDIR','write_file.txt','W');

IF

utl_file.is_open(lv_write_file_handle)

THEN

utl_file.put_line(lv_write_file_handle,lv_fileoutput);

END IF;

IF

utl_file.is_open(lv_write_file_handle)

THEN

utl_file.fclose(lv_write_file_handle);

END IF;

IF

NOT utl_file.is_open(lv_write_file_handle)

THEN

dbms_output.put_line('File is closed successfully..!!');

dbms_output.put_line('Data ' || lv_fileoutput || ' is written successfully');

END IF;

COMMIT;

EXCEPTION

WHEN utl_file.invalid_operation THEN

dbms_output.put_line('invalid operation ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_path THEN

dbms_output.put_line('invalid path ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_mode THEN

dbms_output.put_line('invalid mode ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_filehandle THEN

dbms_output.put_line('invalid filehandle ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.read_error THEN

dbms_output.put_line('read error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.internal_error THEN

dbms_output.put_line('internal error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.write_error THEN

dbms_output.put_line('write error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.access_denied THEN

dbms_output.put_line('access denied ' || sqlerrm);

utl_file.fclose_all;

END;

SET SERVEROUTPUT ON

CLEAR SCREEN

/*Note

--SELECT * FROM all_directories;

--The directory name should be considered one of the value from value column in below query

--SELECT * FROM v$parameter WHERE name like '%utl_file%';

--The privilege should be execute in below query

--SELECT * FROM all_tab_privs where table_name = 'UTL_FILE';

--Check this directory has read and write privileges in below query

--SELECT * FROM all_tab_privs where table_name = 'ODPDIR';

--Being inbound, place a file read_file.txt with message as 'Inbound_Interface'

--Give 0777 permissions to file*/

DECLARE

lv_read_file_handle utl_file.file_type;

lv_fileinput VARCHAR2(1000);

BEGIN

lv_read_file_handle := utl_file.fopen('ODPDIR','read_file.txt','R');

IF

utl_file.is_open(lv_read_file_handle)

THEN

utl_file.get_line(lv_read_file_handle,lv_fileinput);

END IF;

IF

utl_file.is_open(lv_read_file_handle)

THEN

utl_file.fclose(lv_read_file_handle);

END IF;

IF

NOT utl_file.is_open(lv_read_file_handle)

THEN

dbms_output.put_line('File is closed successfully..!!');

dbms_output.put_line('Data ' || lv_fileinput || ' read successfully');

END IF;

COMMIT;

EXCEPTION

WHEN utl_file.invalid_operation THEN

dbms_output.put_line('invalid operation ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_path THEN

dbms_output.put_line('invalid path ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_mode THEN

dbms_output.put_line('invalid mode ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_filehandle THEN

dbms_output.put_line('invalid filehandle ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.read_error THEN

dbms_output.put_line('read error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.internal_error THEN

dbms_output.put_line('internal error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.write_error THEN

dbms_output.put_line('write error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.access_denied THEN

dbms_output.put_line('access denied ' || sqlerrm);

utl_file.fclose_all;

END;

SET SERVEROUTPUT ON

CLEAR SCREEN

/*Note

--SELECT * FROM all_directories;

--The directory name should be considered one of the value from value column in below query

--SELECT * FROM v$parameter WHERE name like '%utl_file%';

--The privilege should be execute in below query

--SELECT * FROM all_tab_privs where table_name = 'UTL_FILE';

--Check this directory has read and write privileges in below query

--SELECT * FROM all_tab_privs where table_name = 'ODPDIR';*/

DECLARE

lv_write_file_handle utl_file.file_type;

TYPE writerecords IS

TABLE OF VARCHAR2(2000) INDEX BY BINARY_INTEGER;

rec_writerecords writerecords;

BEGIN

rec_writerecords(1) := 'Record one : This is first line';

rec_writerecords(2) := 'Record two : This is second line';

rec_writerecords(3) := 'Record three : This is third line';

rec_writerecords(4) := 'Record four : This is fourth line';

rec_writerecords(5) := 'Record five : This is fifth line';

lv_write_file_handle := utl_file.fopen('ODPDIR','write_file.txt','W');

IF

utl_file.is_open(lv_write_file_handle)

THEN

FOR my_table_index IN 1..rec_writerecords.count LOOP

utl_file.put_line(lv_write_file_handle,rec_writerecords(my_table_index) );

dbms_output.put_line('Data with index ' || my_table_index || ' is ' || rec_writerecords(my_table_index) || ' written successfully');

END LOOP;

END IF;

IF

utl_file.is_open(lv_write_file_handle)

THEN

utl_file.fclose(lv_write_file_handle);

END IF;

IF

NOT utl_file.is_open(lv_write_file_handle)

THEN

dbms_output.put_line('File is closed successfully..!!');

END IF;

COMMIT;

EXCEPTION

WHEN utl_file.invalid_operation THEN

dbms_output.put_line('invalid operation ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_path THEN

dbms_output.put_line('invalid path ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_mode THEN

dbms_output.put_line('invalid mode ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_filehandle THEN

dbms_output.put_line('invalid filehandle ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.read_error THEN

dbms_output.put_line('read error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.internal_error THEN

dbms_output.put_line('internal error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.write_error THEN

dbms_output.put_line('write error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.access_denied THEN

dbms_output.put_line('access denied ' || sqlerrm);

utl_file.fclose_all;

END;

SET SERVEROUTPUT ON

CLEAR SCREEN

/*Note : This code has issue

--SELECT * FROM all_directories;

--The directory name should be considered one of the value from value column in below query

--SELECT * FROM v$parameter WHERE name like '%utl_file%';

--The privilege should be execute in below query

--SELECT * FROM all_tab_privs where table_name = 'UTL_FILE';

--Check this directory has read and write privileges in below query

--SELECT * FROM all_tab_privs where table_name = 'ODPDIR';*/

DECLARE

lv_read_file_handle utl_file.file_type;

TYPE readrecords IS

TABLE OF VARCHAR2(2000) INDEX BY BINARY_INTEGER;

rec_readrecords readrecords;

lv_read_from_file VARCHAR2(1000);

ln_my_index NUMBER(2) := 0;

BEGIN

lv_read_file_handle := utl_file.fopen('ODPDIR','read_file.txt','R');

IF

utl_file.is_open(lv_read_file_handle)

THEN

LOOP

BEGIN

utl_file.get_line(lv_read_file_handle,rec_readrecords(ln_my_index + 1) );

ln_my_index := ln_my_index + 1;

EXCEPTION

WHEN no_data_found THEN

dbms_output.put_line('Did not found the record number ' || (ln_my_index + 1) );

END;

END LOOP;

END IF;

IF

utl_file.is_open(lv_read_file_handle)

THEN

utl_file.fclose(lv_read_file_handle);

END IF;

IF

NOT utl_file.is_open(lv_read_file_handle)

THEN

dbms_output.put_line('File is closed successfully..!!');

dbms_output.put_line('Data ' || rec_readrecords(ln_my_index + 1) || ' read successfully');

END IF;

COMMIT;

EXCEPTION

WHEN utl_file.invalid_operation THEN

dbms_output.put_line('invalid operation ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_path THEN

dbms_output.put_line('invalid path ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_mode THEN

dbms_output.put_line('invalid mode ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_filehandle THEN

dbms_output.put_line('invalid filehandle ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.read_error THEN

dbms_output.put_line('read error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.internal_error THEN

dbms_output.put_line('internal error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.write_error THEN

dbms_output.put_line('write error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.access_denied THEN

dbms_output.put_line('access denied ' || sqlerrm);

utl_file.fclose_all;

END;

SET SERVEROUTPUT ON

CLEAR SCREEN

/*Note : This code has issue

--SELECT * FROM all_directories;

--The directory name should be considered one of the value from value column in below query

--SELECT * FROM v$parameter WHERE name like '%utl_file%';

--The privilege should be execute in below query

--SELECT * FROM all_tab_privs where table_name = 'UTL_FILE';

--Check this directory has read and write privileges in below query

--SELECT * FROM all_tab_privs where table_name = 'ODPDIR';*/

DECLARE

lv_file_handle utl_file.file_type;

lv_dir_name all_directories.directory_name%TYPE := 'Specify Directory Name';

lv_file_name VARCHAR2(100) := 'CUSTOMER_DATA.dat';

lv_file_output VARCHAR2(1000);

BEGIN

lv_file_handle := utl_file.fopen(lv_dir_name,lv_file_name,'R',32767);

IF

utl_file.is_open(lv_file_handle)

THEN

dbms_output.put_line('File Opened Successfully');

END IF;

utl_file.get_line(lv_file_handle,lv_file_output);

dbms_output.put_line('Loading data to DB has started');

INSERT INTO cust_stg_tab VALUES lv_file_output;

dbms_output.put_line('Loading data to DB has completed');

IF

SQL%found

THEN

dbms_output.put_line(SQL%rowcount || 'rows loaded successfully');

COMMIT;

END IF;

utl_file.fclose(lv_file_handle);

EXCEPTION

WHEN utl_file.invalid_operation THEN

dbms_output.put_line('invalid operation ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_path THEN

dbms_output.put_line('invalid path ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_mode THEN

dbms_output.put_line('invalid mode ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_filehandle THEN

dbms_output.put_line('invalid filehandle ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.read_error THEN

dbms_output.put_line('read error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.internal_error THEN

dbms_output.put_line('internal error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.write_error THEN

dbms_output.put_line('write error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.access_denied THEN

dbms_output.put_line('access denied ' || sqlerrm);

utl_file.fclose_all;

END;

SET SERVEROUTPUT ON

CLEAR SCREEN

DECLARE

CURSOR c_data IS SELECT org_id

,invoice_id

,invoice_num

FROM ap_invoices_all

WHERE ROWNUM < 5;

v_file utl_file.file_type;

BEGIN

v_file := utl_file.fopen(

location => 'HR_OUTPUT'

,filename => 'write_file.txt'

,open_mode => 'W'

,max_linesize => 32767

);

FOR cur_rec IN c_data LOOP

utl_file.put_line(

v_file

,cur_rec.org_id || ',' || cur_rec.invoice_id || ',' || cur_rec.invoice_num

);

END LOOP;

utl_file.fclose(v_file);

EXCEPTION

WHEN utl_file.invalid_operation THEN

dbms_output.put_line('invalid operation ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_path THEN

dbms_output.put_line('invalid path ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_mode THEN

dbms_output.put_line('invalid mode ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.invalid_filehandle THEN

dbms_output.put_line('invalid filehandle ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.read_error THEN

dbms_output.put_line('read error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.internal_error THEN

dbms_output.put_line('internal error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.write_error THEN

dbms_output.put_line('write error ' || sqlerrm);

utl_file.fclose_all;

WHEN utl_file.access_denied THEN

dbms_output.put_line('access denied ' || sqlerrm);

utl_file.fclose_all;

END;

CREATE OR REPLACE PROCEDURE xxgl_one_coa_balances (

errbuff OUT VARCHAR2,

retcode OUT VARCHAR2,

p_company IN VARCHAR2,

p_period_name IN VARCHAR2,

p_ledger_name IN VARCHAR2,

budget_version_id IN NUMBER

) IS

BEGIN

DECLARE

c_version_id NUMBER := 12345;

c_sysdate_initial VARCHAR2(100);

c_sysdate_final VARCHAR2(100);

l_errbuff VARCHAR2(100);

l_retcode VARCHAR2(100) := NULL;

b_comp VARCHAR2(100);

------------------------------------------------------------------------------------------------------------------------------------------

v_utlfile utl_file.file_type;

filedate VARCHAR2(50) := TO_CHAR(

SYSDATE,

'YYYYMMDDHH24MISS'

);

filename VARCHAR2(100) := 'GL_BALANCES' || '_' || p_period_name || '_' || TO_CHAR(

SYSDATE,

'DD-MON-YYYY-HH-MI-SS'

);

fileextension VARCHAR2(10) := 'txt';

v_filename VARCHAR2(50) := filename || '.' || fileextension;

v_directory_name dba_directories.directory_path%TYPE := 'HR_OUTPUT'; --'/u01/oraout/xxDEBSD0/outbound';

l_column_name VARCHAR2(2000);

l_column_values VARCHAR2(2000);

v_count NUMBER;

lv_last_run_date fnd_concurrent_requests.actual_completion_date%TYPE;

l_last_run_date fnd_concurrent_requests.actual_completion_date%TYPE;

--- For moving file from DB to appl server

l_num_conc_req_id NUMBER;

l_conc_req_id NUMBER;

p_host_name xx_wrapper_job_runs.server_name%TYPE := NULL;

l_source_path_tier xx_wrapper_job_runs.source_file%TYPE := NULL;

l_dest_path_tier xx_wrapper_job_runs.source_file%TYPE := NULL;

l_source_path_tier_arch xx_wrapper_job_runs.source_file%TYPE := NULL;

l_dest_path_tier_arch xx_wrapper_job_runs.source_file%TYPE := NULL;

l_phase VARCHAR2(30);

l_status VARCHAR2(30);

l_dev_phase VARCHAR2(30);

l_dev_status VARCHAR2(30);

l_message VARCHAR2(100);

l_num_interval NUMBER := 20;

l_num_max_wait NUMBER := 1800;

l_success BOOLEAN;

l_debit_sum NUMBER;

l_credit_sum NUMBER;

v_resp_name VARCHAR2(500);

------------------------------------------------------------------------------------------------------------------------------------------

CURSOR gl_balance (

b_period_name VARCHAR2,

b_company VARCHAR2,

b_gl_name VARCHAR2,

b_budget_id NUMBER

) IS

SELECT /*+ PARALLEL */ gl.name "LEDGER",

'New COA Conversion' "CATEGORY",

'COA Conversion' "SOURCE",

gb.currency_code "CURRENCY",

gp.period_name "PERIOD_NAME",

gp.end_date "ACCOUNTING_DATE",

'B' "BUDGET_NAME",

b_budget_id "BUDGET_VERSION_ID",

gcck.concatenated_segments "ORIGINAL_COA",

DECODE(

gb.period_net_dr,

0,

NULL,

gb.period_net_dr

) "DEBIT",

DECODE(

gb.period_net_cr,

0,

NULL,

gb.period_net_cr

) "CREDIT"

FROM gl_balances gb,

gl_ledgers gl,

gl_periods gp,

gl_code_combinations_kfv gcck,

fnd_flex_value_rule_usages ffvru,

fnd_flex_value_rules_vl ffvrv,

fnd_flex_value_rule_lines ffvrl

WHERE 1 = 1

AND gb.code_combination_id = gcck.code_combination_id

AND gb.period_name = gp.period_name

AND gb.ledger_id = gl.ledger_id

AND gb.currency_code = gl.currency_code -- Restricting to functional currency data

AND gb.actual_flag = 'A'

AND gl.short_name = b_gl_name

AND gcck.segment1 = b_company

AND gb.period_name = b_period_name

AND ffvrl.include_exclude_indicator = 'I'

AND b_company BETWEEN ffvrl.flex_value_low AND ffvrl.flex_value_high

AND NOT EXISTS ( SELECT 1

FROM fnd_flex_value_rule_lines fvrl2

WHERE fvrl2.flex_value_rule_id = ffvrl.flex_value_rule_id

AND fvrl2.include_exclude_indicator = 'E'

AND ( b_company BETWEEN fvrl2.flex_value_low AND fvrl2.flex_value_high )

)-- for some ou's first all are included and then some of them are excluded. Hence, this logic is required

AND ffvru.responsibility_id = fnd_profile.value(

'RESP_ID'

) -- For current resp

AND ffvru.flex_value_rule_id = ffvrv.flex_value_rule_id

AND ffvrl.flex_value_rule_id = ffvrv.flex_value_rule_id

AND ( gb.period_net_cr != 0

OR gb.period_net_dr != 0 )

ORDER BY 8;

BEGIN

SELECT TO_CHAR(

SYSDATE,

'MM/DD/YY HH24:MI:SS'

)

INTO c_sysdate_initial

FROM dual;

fnd_file.put_line(

fnd_file.log,

'Time Before Extraction ' || c_sysdate_initial

);

fnd_file.put_line(

fnd_file.log,

'Starting extraction of Period ' || p_period_name

);

fnd_file.put_line(

fnd_file.log,

'Starting extraction of Company ' || p_company

);

fnd_file.put_line(

fnd_file.log,

'Starting extraction of Ledger ' || p_ledger_name

);

fnd_file.put_line(

fnd_file.log,

'Starting extraction of Budget Version ID ' || c_version_id

);

v_resp_name := fnd_profile.value('RESP_NAME');

SELECT TO_CHAR(

SYSDATE,

'MM/DD/YY HH24:MI:SS'

)

INTO c_sysdate_final

FROM dual;

fnd_file.put_line(

fnd_file.log,

'Time After Extraction ' || c_sysdate_final

);

BEGIN

l_conc_req_id := fnd_global.conc_request_id;

-- To Fetch Instance Name

SELECT host_name

INTO p_host_name

FROM v$instance;

fnd_file.put_line(

fnd_file.log,

'Database Server - ' || p_host_name

);

-- To Fetch source directory path

--

SELECT directory_path

INTO l_source_path_tier

FROM all_directories

WHERE directory_name = v_directory_name;

dbms_output.put_line('Before Opening UTL File');

v_count := 0;

v_utlfile := utl_file.fopen(

v_directory_name,

v_filename,

'W',

32767

);

l_column_name := 'LEDGER' || chr(9) || 'CATEGORY' || chr(9) || 'SOURCE' || chr(9) || 'CURRENCY' || chr(9) || 'PERIOD_NAME' || chr(9) || 'ACCOUNTING_DATE' || chr(9) || 'BUDGET_NAME' || chr(9) || 'BUDGET_VERSION_ID' || chr(9)

--|| 'COMPANY' || chr(9) || 'ACCOUNT' || chr(9) || 'COST_CENTRE' || chr(9) || 'BUSINESS_UNIT' || chr(9) || 'CATEGORY_CODE' || chr(9) || 'PROJECT' || chr(9) || 'ACTIVITY' || chr(9) || 'SPARE'

|| 'ORIGINAL_COA' || chr(9) || 'DEBIT' || chr(9) || 'CREDIT';

utl_file.put_line(

v_utlfile,

l_column_name

);

FOR cp IN ( SELECT TRIM(regexp_substr(

p_company,

'[^,]+',

1,

level

)) txt

FROM dual CONNECT BY

regexp_substr(

p_company,

'[^,]+',

1,

level

) IS NOT NULL

) LOOP

fnd_file.put_line(

fnd_file.log,

'Start of loading ' || cp.txt

);

b_comp := cp.txt;

FOR rec_balance IN gl_balance(

p_period_name,

b_comp,

p_ledger_name,

c_version_id

) LOOP

v_count := v_count + 1;

l_column_values := rec_balance.ledger || chr(9) || rec_balance.category || chr(9) || rec_balance.source || chr(9) || rec_balance.currency || chr(9) || rec_balance.period_name || chr(9) || rec_balance.accounting_date || chr(9) || rec_balance.budget_name || chr(9) || rec_balance.budget_version_id

|| chr(9) ||

--rec_balance.company || chr(9) || rec_balance.account || chr(9) || rec_balance.cost_centre || chr(9) || rec_balance.business_unit || chr(9) || rec_balance.category_code || chr(9) || rec_balance.project || chr(9) || rec_balance.activity || chr(9) || rec_balance.spare

rec_balance.original_coa || chr(9) || rec_balance.debit || chr(9) || rec_balance.credit;

utl_file.put_line(

v_utlfile,

l_column_values

);

END LOOP;

END LOOP;

fnd_file.put_line(

fnd_file.log,

'Total records fetched ' || v_count

);

dbms_output.put_line('..After Cursor cur_vendor');

utl_file.fclose(v_utlfile);

END;

-- XCOM

l_source_path_tier_arch := l_source_path_tier;

l_dest_path_tier := l_source_path_tier || '/stack' || '/' || v_filename;

l_source_path_tier := l_source_path_tier || '/' || v_filename;

INSERT INTO xx_wrapper_job_runs (

run_id,

run_date,

request_id,

program_name,

server_name,

source_file,

destination_file,

put_get

) VALUES (

xx_wrapper_job_runs_seq.NEXTVAL,

SYSDATE,

l_conc_req_id,

'xxGL ONE COA Balance Extraction',

p_host_name,

l_source_path_tier,

l_dest_path_tier,

'G'

);

COMMIT;

--

-- To xcom the file from Database tier to Application tier

--

fnd_file.put_line(

fnd_file.log,

'Submitting Xcom Job'

);

l_num_conc_req_id := fnd_request.submit_request(

application => 'xx',

program => 'xxFINXCOM',

description => NULL,

start_time => NULL,

sub_request => false -- not a child request

,

argument1 => l_conc_req_id,

argument2 => 'xxGL ONE COA Balance Extraction'

);

COMMIT;

fnd_file.put_line(

fnd_file.log,

'Xcom Job Completed Request Id - ' || l_num_conc_req_id

);

IF l_num_conc_req_id = 0 THEN

-- concurrent request failed

errbuff := 'Submission of Xcom program failed';

retcode := 2;

fnd_file.put_line(

fnd_file.log,

errbuff

);

ELSE

l_success := fnd_concurrent.wait_for_request(

l_num_conc_req_id,

l_num_interval,

l_num_max_wait,

l_phase,

l_status,

l_dev_phase,

l_dev_status,

l_message

);

fnd_file.put_line(

fnd_file.log,

'Status: ' || l_phase || ' ' || l_status

);

fnd_file.put_line(

fnd_file.log,

'Completion text: ' || l_message

);

IF upper(l_phase) = 'COMPLETED' THEN

IF upper(l_status) = 'NORMAL' THEN

retcode := 0;

fnd_file.put_line(

fnd_file.log,

'Successfully transferred file from DB tier to app tier'

);

IF utl_file.is_open(v_utlfile) THEN

utl_file.fclose(v_utlfile);

END IF;

-- utl_file.fremove(v_directory_name, v_filename);

ELSE

fnd_file.put_line(

fnd_file.log,

'XCom program ended with status :' || l_phase

);

retcode := 2;

END IF;

ELSE

errbuff := 'XCom program has not completed after waiting for :' || l_num_max_wait;

fnd_file.put_line(

fnd_file.log,

errbuff

);

retcode := 2;

END IF;

END IF;

--- Xcom to Archive folder

l_dest_path_tier_arch := l_source_path_tier_arch || '/archive/' || v_filename;

l_source_path_tier_arch := l_source_path_tier_arch || '/' || v_filename;

INSERT INTO xx_wrapper_job_runs (

run_id,

run_date,

request_id,

program_name,

server_name,

source_file,

destination_file,

put_get

) VALUES (

xx_wrapper_job_runs_seq.NEXTVAL,

SYSDATE,

l_conc_req_id,

'xxGL ONE COA Balance Extraction Archive',

p_host_name,

l_source_path_tier_arch,

l_dest_path_tier_arch,

'G'

);

COMMIT;

fnd_file.put_line(

fnd_file.log,

'Submitting Xcom Job to copy file to archive folder'

);

l_num_conc_req_id := fnd_request.submit_request(

application => 'xx',

program => 'xxFINXCOM',

description => NULL,

start_time => NULL,

sub_request => false -- not a child request

,

argument1 => l_conc_req_id,

argument2 => 'xxGL ONE COA Balance Extraction Archive'

);

COMMIT;

fnd_file.put_line(

fnd_file.log,

'Xcom Job Completed Request Id - ' || l_num_conc_req_id

);

IF l_num_conc_req_id = 0 THEN

-- concurrent request failed

errbuff := 'Submission of Xcom program failed';

retcode := 2;

fnd_file.put_line(

fnd_file.log,

errbuff

);

ELSE

l_success := fnd_concurrent.wait_for_request(

l_num_conc_req_id,

l_num_interval,

l_num_max_wait,

l_phase,

l_status,

l_dev_phase,

l_dev_status,

l_message

);

fnd_file.put_line(

fnd_file.log,

'Status: ' || l_phase || ' ' || l_status

);

fnd_file.put_line(

fnd_file.log,

'Completion text: ' || l_message

);

IF upper(l_phase) = 'COMPLETED' THEN

IF upper(l_status) = 'NORMAL' THEN

retcode := 0;

fnd_file.put_line(

fnd_file.log,

'Successfully transferred file from DB tier to app tier archive. Deleting file from Db tier'

);

IF utl_file.is_open(v_utlfile) THEN

utl_file.fclose(v_utlfile);

END IF;

utl_file.fremove(

v_directory_name,

v_filename

);

ELSE

fnd_file.put_line(

fnd_file.log,

'XCom program ended with status :' || l_phase

);

retcode := 2;

END IF;

ELSE

errbuff := 'XCom program has not completed after waiting for :' || l_num_max_wait;

fnd_file.put_line(

fnd_file.log,

errbuff

);

retcode := 2;

END IF;

END IF;

retcode := l_retcode;

EXCEPTION

WHEN utl_file.invalid_path THEN

fnd_file.put_line(

apps.fnd_file.log,

'TRIAL BALANCE_extract-Invalid Output Path: ' || ' - ' || sqlcode || ' - ' || sqlerrm

);

fnd_file.put_line(

apps.fnd_file.log,

' No OutPut File is Generated..... '

);

utl_file.fclose(v_utlfile);

WHEN utl_file.invalid_mode THEN

fnd_file.put_line(

apps.fnd_file.log,

'TRIAL BALANCE-INVALID_MODE: ' || sqlcode || ' - ' || sqlerrm

);

fnd_file.put_line(

apps.fnd_file.log,

' No OutPut File is Generated..... '

);

utl_file.fclose(v_utlfile);

WHEN utl_file.invalid_filehandle THEN

fnd_file.put_line(

apps.fnd_file.log,

'TRIAL BALANCE-INVALID_FILEHANDLE: ' || sqlcode || ' - ' || sqlerrm

);

fnd_file.put_line(

apps.fnd_file.log,

' No OutPut File is Generated..... '

);

utl_file.fclose(v_utlfile);

WHEN utl_file.invalid_operation THEN

fnd_file.put_line(

apps.fnd_file.log,

'TRIAL BALANCE-INVALID_OPERATION: ' || sqlcode || ' - ' || sqlerrm

);

fnd_file.put_line(

apps.fnd_file.log,

' No OutPut File is Generated..... '

);

utl_file.fclose(v_utlfile);

WHEN utl_file.write_error THEN

fnd_file.put_line(

apps.fnd_file.log,

'TRIAL BALANCE-An error occured writing data into output file: ' || sqlcode || ' - ' || sqlerrm

);

fnd_file.put_line(

apps.fnd_file.log,

' No OutPut File is Generated..... '

);

utl_file.fclose(v_utlfile);

WHEN OTHERS THEN

IF utl_file.is_open(v_utlfile) THEN

utl_file.fclose(v_utlfile);

utl_file.fremove(

v_directory_name,

v_filename

);

END IF;

fnd_file.put_line(

apps.fnd_file.log,

errbuff

);

fnd_file.put_line(

apps.fnd_file.log,

sqlerrm

);

retcode := 2;

END;

END xxgl_one_coa_balances;

10. External Table

Loading data from excel (any legacy system) to DB.

Prior to creation of external table we need to place the data file at specified location (/usr/tmp) and please ensure the file gets placed in DB tier rather than application tier. Also make sure you remove all the comments (–) from below syntax, else results in error while using SELECT query.

Syntax:

SET SERVEROUTPUT ON

CLEAR SCREEN

CREATE TABLE cust_stg_tab (

customer_id NUMBER

,customer_name VARCHAR2(100)

,account_number VARCHAR2(100)

)

ORGANIZATION EXTERNAL ( TYPE oracle_loader

DEFAULT DIRECTORY ecx_utl_xslt_dir_obj /*Directory name from DBA directories table (dba_directories)*/

ACCESS PARAMETERS (

RECORDS DELIMITED BY NEWLINE

CHARACTERSET 'WE8MSWIN1252'

LOGFILE 'CUST_STG_TAB.log' /*log file name*/

BADFILE 'CUST_STG_TAB.bad' /*bad file name*/

SKIP 1

FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"' MISSING FIELD VALUES ARE NULL

) LOCATION ( ecx_utl_xslt_dir_obj :'CUSTOMER_DATA.csv' )/*Data file placed at the Specified Path*/

) REJECT LIMIT 0

NOPARALLEL

nomonitoring;

Now check SELECT * FROM CUST_STG_TAB, two records will be shown.

Subsequently the data file need to be replaced in the directory to load new data.

Difference between SQL *Loader and External Table

| SQL * Loader | External Table |

| This is a command line utility to load data from flat files to staging tables. | The flat file data can be accessed directly as if it were in database table. |

| Can be loaded from any system having Oracle client installed | The files has to be accessed from system where DB is installed via pre-defined directory |

11. Formatting Month using XDOXSLT

Set month which has date format like 20-NOV-2015

<?xdoxslt:set_variable($_XDOCTX, ‘P_START_DATE’,xdoxslt:format_date(START_DATE,’mmyyyy’,’yyyy/mmm/dd’,$_XDOLOCALE, $_XDOTIMEZONE))?>

<?xdoxslt:set_variable($_XDOCTX, ‘P_END_DATE’,xdoxslt:format_date(START_DATE,’mmyyyy’,’yyyy/mmm/dd’,$_XDOLOCALE, $_XDOTIMEZONE))?>

Comparing with month

<?if:xdoxslt:format_date(LEAVE_START_DATE,’MMyyyy’,’dd-MMM-yyyy’,$_XDOLOCALE, $_XDOTIMEZONE)= xdoxslt:get_variable($_XDOCTX, ‘P_START_DATE’) or

xdoxslt:format_date(LEAVE_END_DATE,’MMyyyy’,’dd-MMM-yyyy’,$_XDOLOCALE, $_XDOTIMEZONE)= xdoxslt:get_variable($_XDOCTX, ‘P_START_DATE’)?>

12. Difference between Lexical Parameter and Bind Variable in Oracle Reports

13. System Parameters used in Reports Builder

BACKGROUND, COPIES, CURRENCY, DECIMAL, DESFORMAT, DESNAME, DESTYPE, MODE, ORIENTATION, PRINTJOB, THOUSANDS

Difference between Compile and Incremental Compile

In Compile all the PL/SQL within the reports will be compiled whereas in Incremental Compile only the modified the PL/SQL code gets compiled. When compiling for the first time, we should always go with Compile rather than Incremental Compile.

14. Discoverer Reports

We have four categories in Discoverer.

1. Discoverer Administration Edition– Application based

2. Discoverer Desktop Edition — Application based

3. Discoverer Plus — Browser based

4. Discoverer Viewer — Browser based

Discoverer Administration Edition — We create Business area >> Folders >> SQL Queries.

Administrator and desktop editions both use same application.